Paul Liang

email: ppliang (at) mit (dot) edu
office: Wiesner Building E15-391

CV | Bio | Google Scholar | Github | Twitter

Research, teaching, diversity statements

I am an Assistant Professor at the MIT Media Lab and MIT EECS, where I direct the Multisensory Intelligence research group.

In summer 2024, I was a visiting researcher in the AI, psychology, and neuroscience program at UC Berkeley's Simons Institute for the Theory of Computing.

Previously, I received my Ph.D. from the Machine Learning Department at Carnegie Mellon University, advised by Louis-Philippe Morency and Ruslan Salakhutdinov.

Prospective students: I am hiring at all levels (postdocs, PhDs, master's students, undergrads, and visitors). If you want to join MIT as a graduate student, please apply through the Media Arts & Sciences, EECS, or IDSS programs and mention my name in your application.

Multisensory Intelligence Group

Our group studies the foundations of multisensory AI and its impact on the human experience, through three complementary thrusts:

(1) Foundations of multisensory AI: The science and engineering of AI systems that can learn and interact with the world through integrating diverse sensory channels.

(2) Enhancing human experiences: Designing interactive AI technologies to augment human capabilities and improve overall well-being.

(3) Real-world human-AI interaction: Quantifying and mitigating real-world societal concerns for responsible deployment.

Group: Chanakya Ekbote (MAS); David Dai (MAS); Ray Song (MAS, co-advised with Wojciech Matusik); Anku Rani (MAS, co-advised with Pattie Maes); Lucy Zhao (EECS PhD); Jaedong Hwang (EECS PhD, co-advised with Ila Fiete); Megan Tjandrasuwita (EECS PhD, co-advised with Armando Solar-Lezama); Ao Qu (IDSS PhD, co-advised with Jinhua Zhao); Keane Ong, Kaichen Zhou, Fangneng Zhan, Konstantinos Kontras, Alex Wilf, Liu Ziyin (visiting researchers and postdocs)

Former students: Lily Chen, now PhD student at Stanford; Devin Murphy, now PhD student at the University of Washington; Haofei Yu, now PhD student at UIUC; Rohan Pandey, now researcher at OpenAI (best CMU senior thesis award); Yun Cheng, now PhD student at Princeton; Rulin Shao, now PhD student at the University of Washington; Xiang Fan, now PhD student at the University of Washington (CRA outstanding undergrad researcher honorable mention); Jivat Neet, then research fellow at Microsoft Research, now PhD student at UC Berkeley; Yiwei Lyu, now PhD student at the University of Michigan (CRA outstanding undergrad researcher honorable mention); Yuxin Xiao, now PhD student at MIT; Peter Wu, now PhD student at UC Berkeley; Dong Won Lee, now PhD student at MIT; Terrance Liu, now PhD student at CMU; Chengfeng Mao, now PhD student at MIT; Ziyin Liu, then PhD student at the University of Tokyo, now research scientist at MIT

Teaching

Fall 2025: MIT MAS.S63 Affective Computing and Multimodal Interaction, with Rosalind Picard; MIT MAS.S60 How to AI (Almost) Anything; MIT 6.390 Introduction to Machine Learning; CMU 11-877 Advanced Topics in Multimodal Machine Learning, with Daniel Fried; CMU 11-777 Multimodal Machine Learning, with Louis-Philippe Morency (day1, day2, day3, day4)

2022-2023: Tutorials on Multimodal ML at ICML, ICMI, CVPR, and NAACL, with Louis-Philippe Morency; CMU 11-866 Artificial Social Intelligence, with Louis-Philippe Morency; CMU 11-877 Advanced Topics in Multimodal Machine Learning, with Louis-Philippe Morency; CMU 11-777 Multimodal Machine Learning, with Louis-Philippe Morency; CMU 11-877 Advanced Topics in Multimodal Machine Learning, with Louis-Philippe Morency and Amir Zadeh

OpenTouch: Bringing Full-Hand Touch to Real-World Interaction

SmellNet: A Large-scale Dataset for Real-world Smell Recognition

MEM1: Learning to Synergize Memory and Reasoning for Efficient Long-Horizon Agents

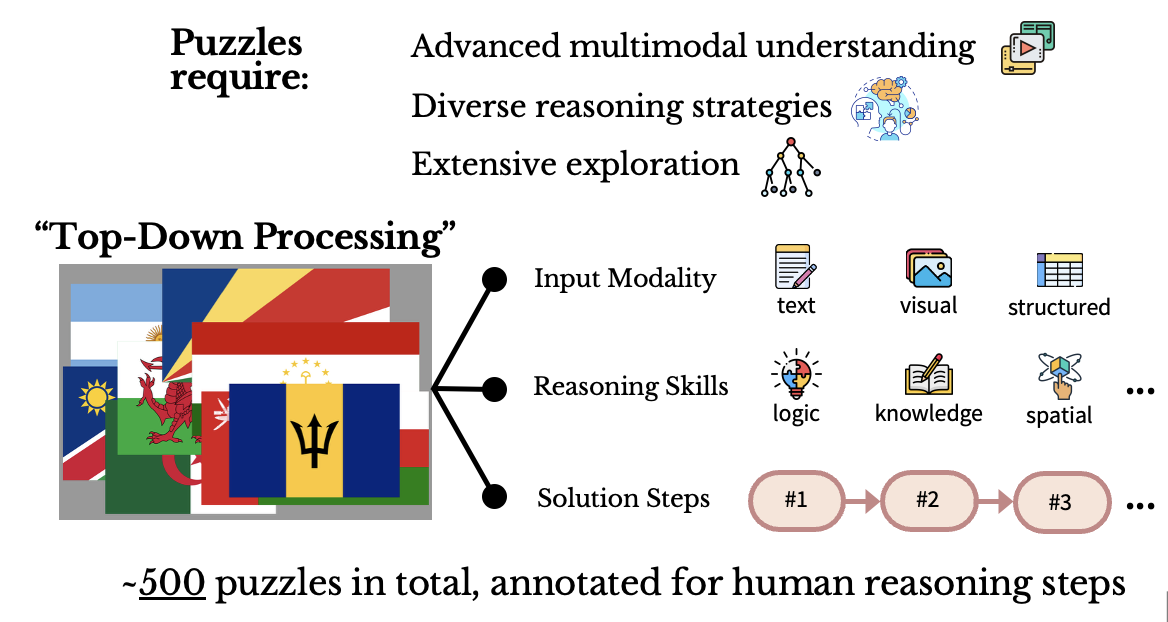

PuzzleWorld: A Benchmark for Multimodal, Open-Ended Reasoning in Puzzlehunts

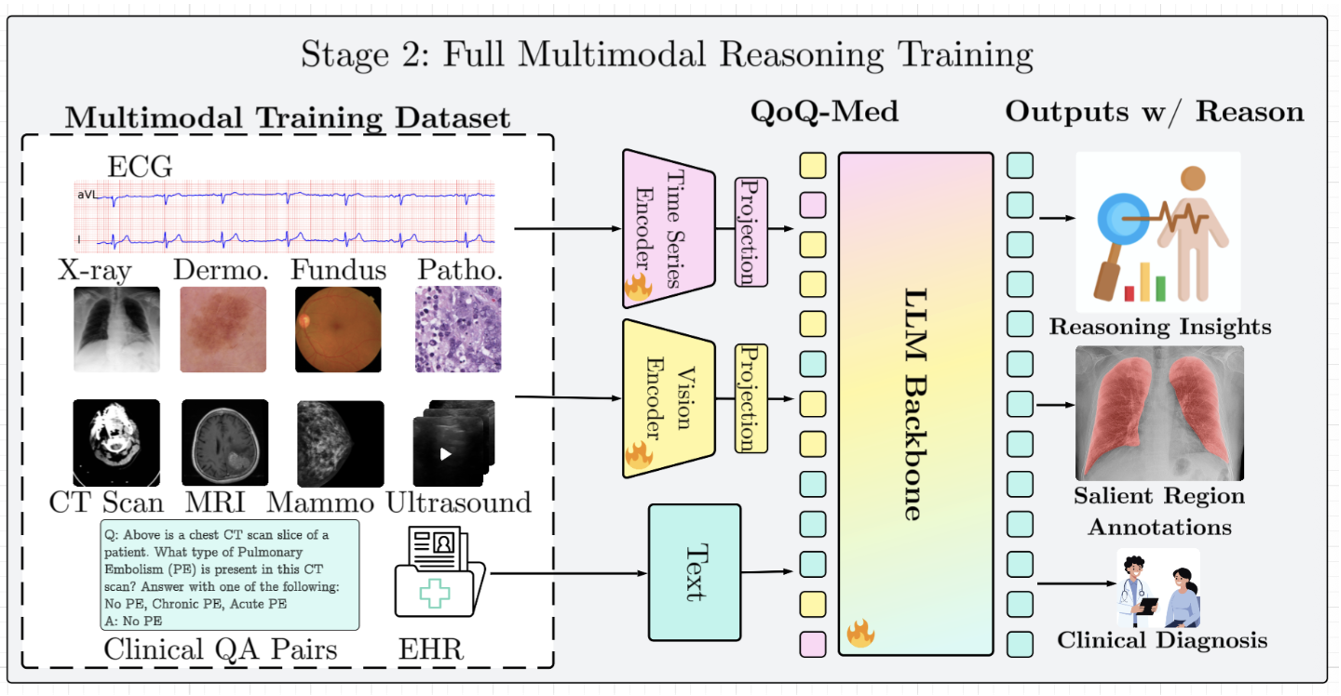

QoQ-Med: Building Multimodal Clinical Foundation Models with Domain-Aware GRPO Training (oral)

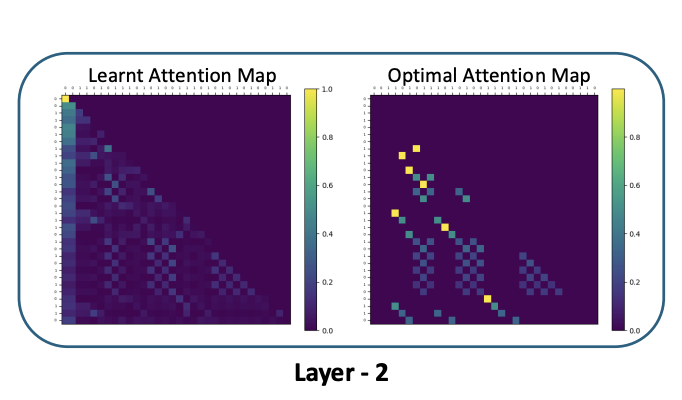

What One Cannot, Two Can: Two-Layer Transformers Provably Represent Induction Heads on Any-Order Markov Chains (spotlight)

MimeQA: Towards Socially-Intelligent Nonverbal Foundation Models

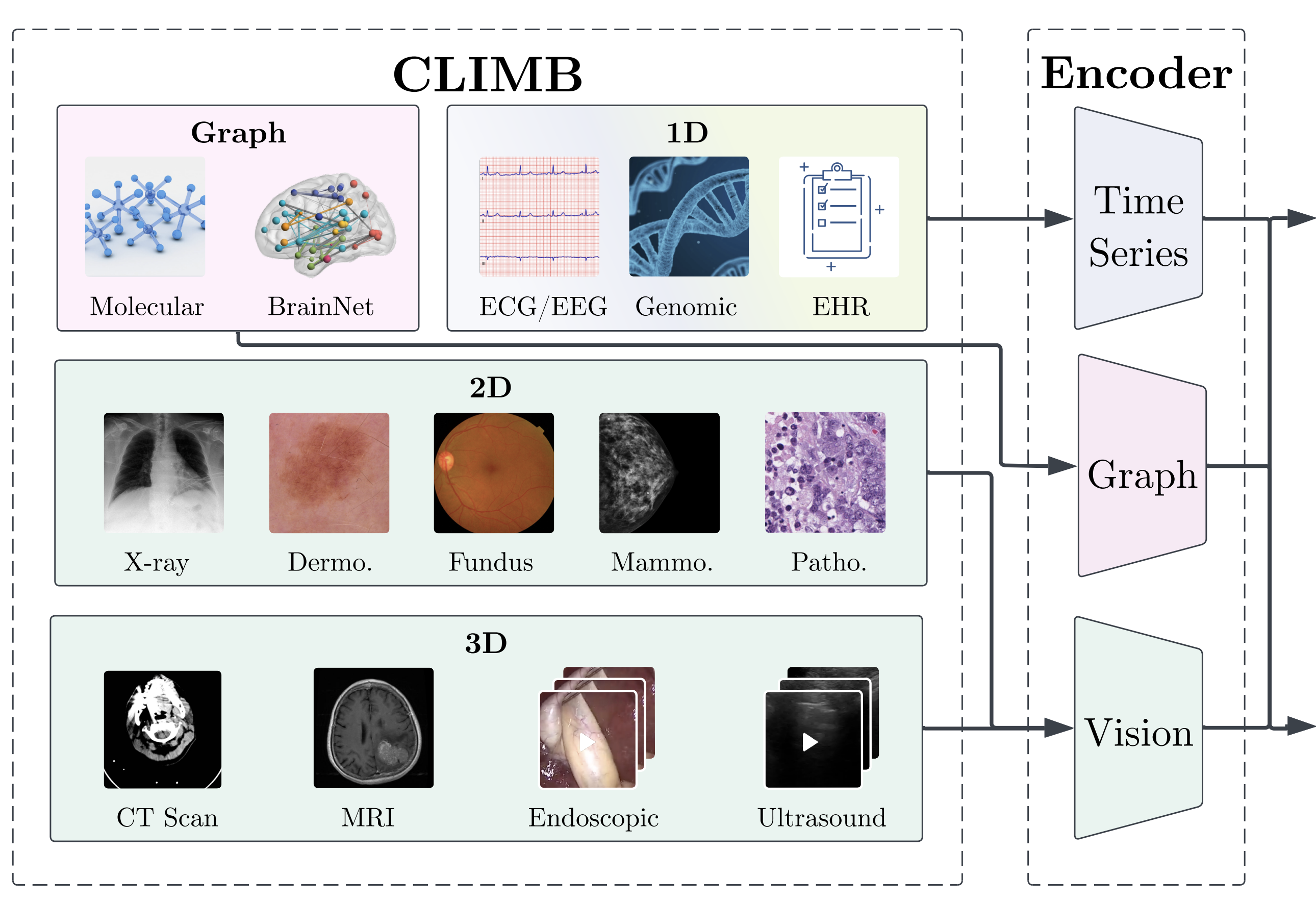

CLIMB: Data Foundations for Large-Scale Multimodal Clinical Foundation Models

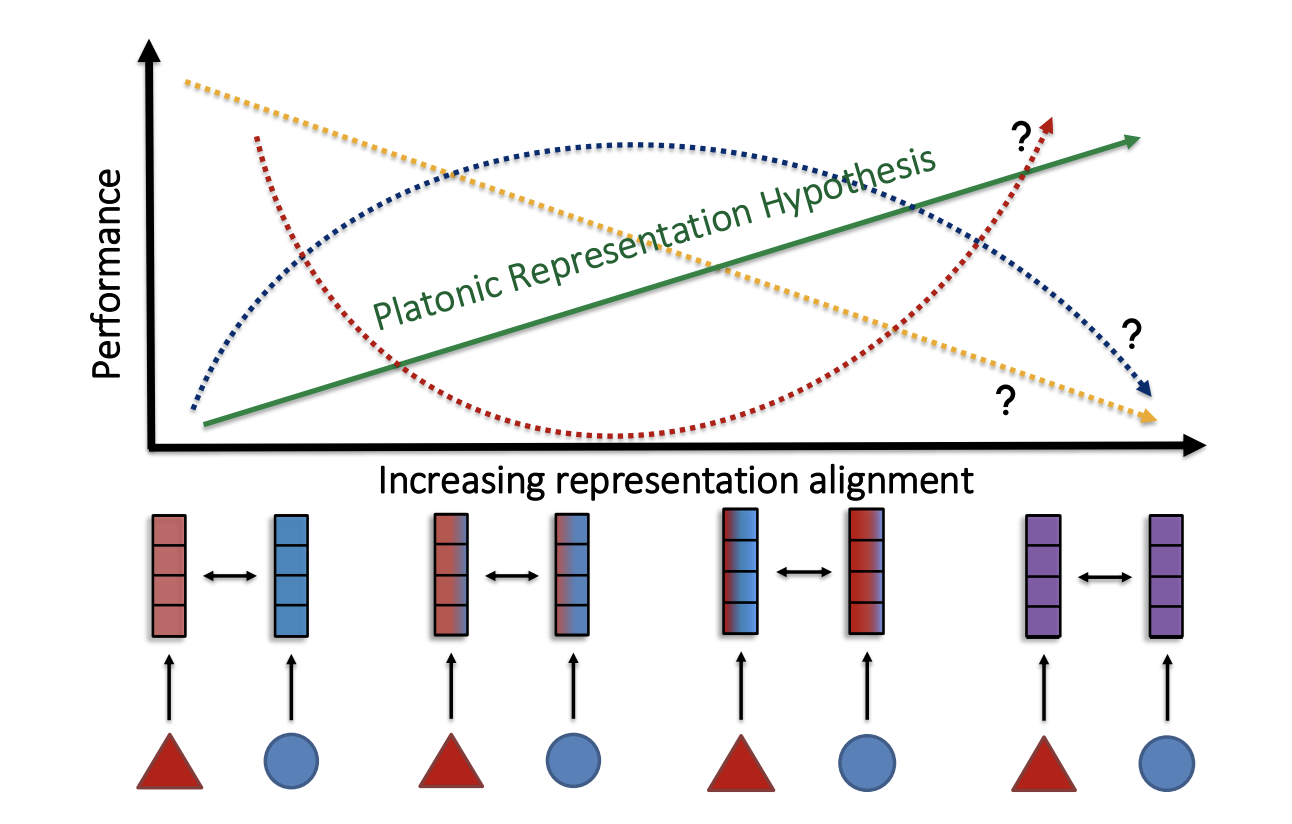

Understanding the Emergence of Multimodal Representation Alignment

Interactive Sketchpad: An Interactive Multimodal System for Collaborative, Visual Problem-Solving

Progressive Compositionality In Text-to-Image Generative Models (spotlight)

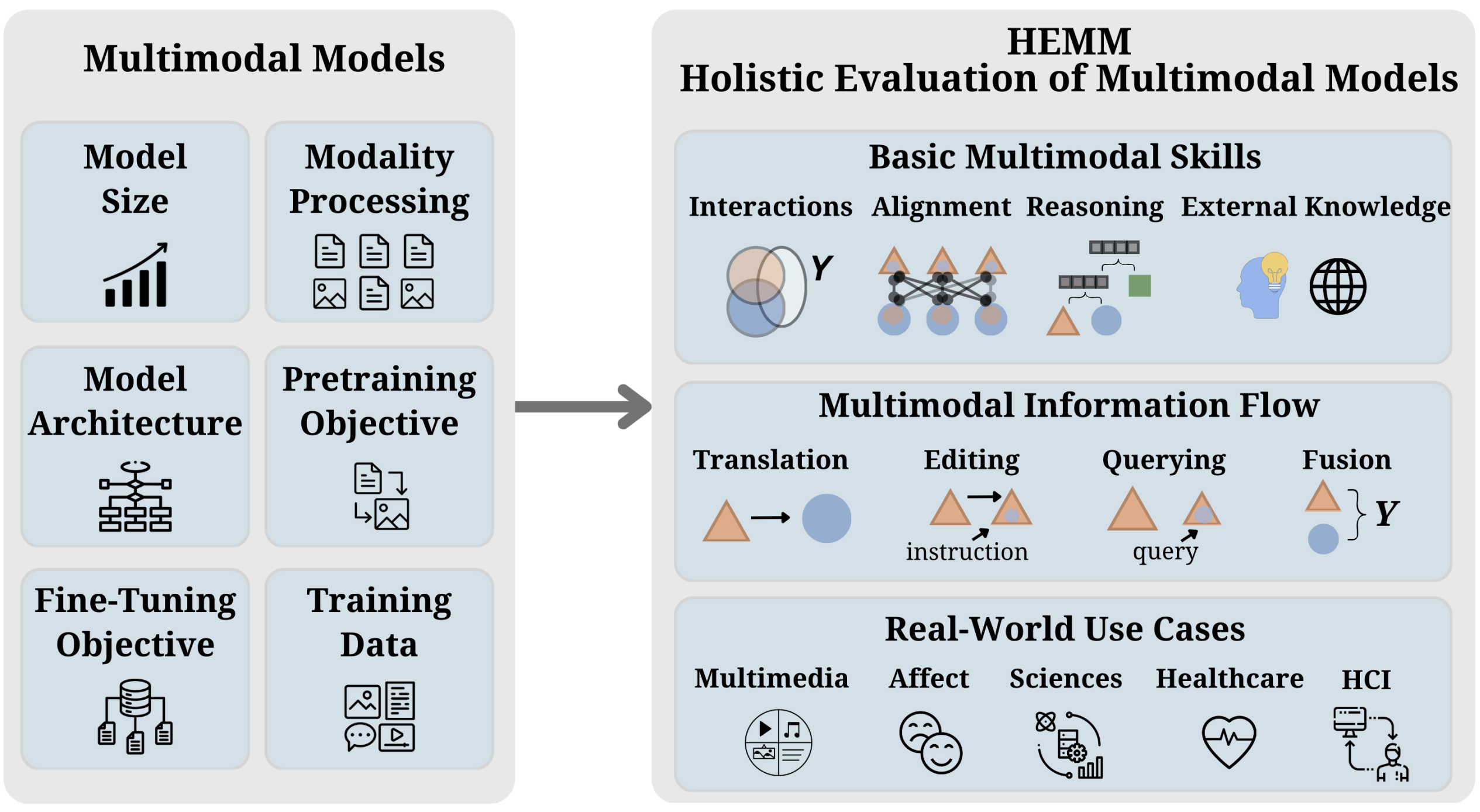

HEMM: Holistic Evaluation of Multimodal Foundation Models

Foundations and Trends in Multimodal Machine Learning: Principles, Challenges, and Open Questions

Quantifying & Modeling Multimodal Interactions: An Information Decomposition Framework

High-Modality Multimodal Transformer: Quantifying Modality & Interaction Heterogeneity for High-Modality Representation Learning

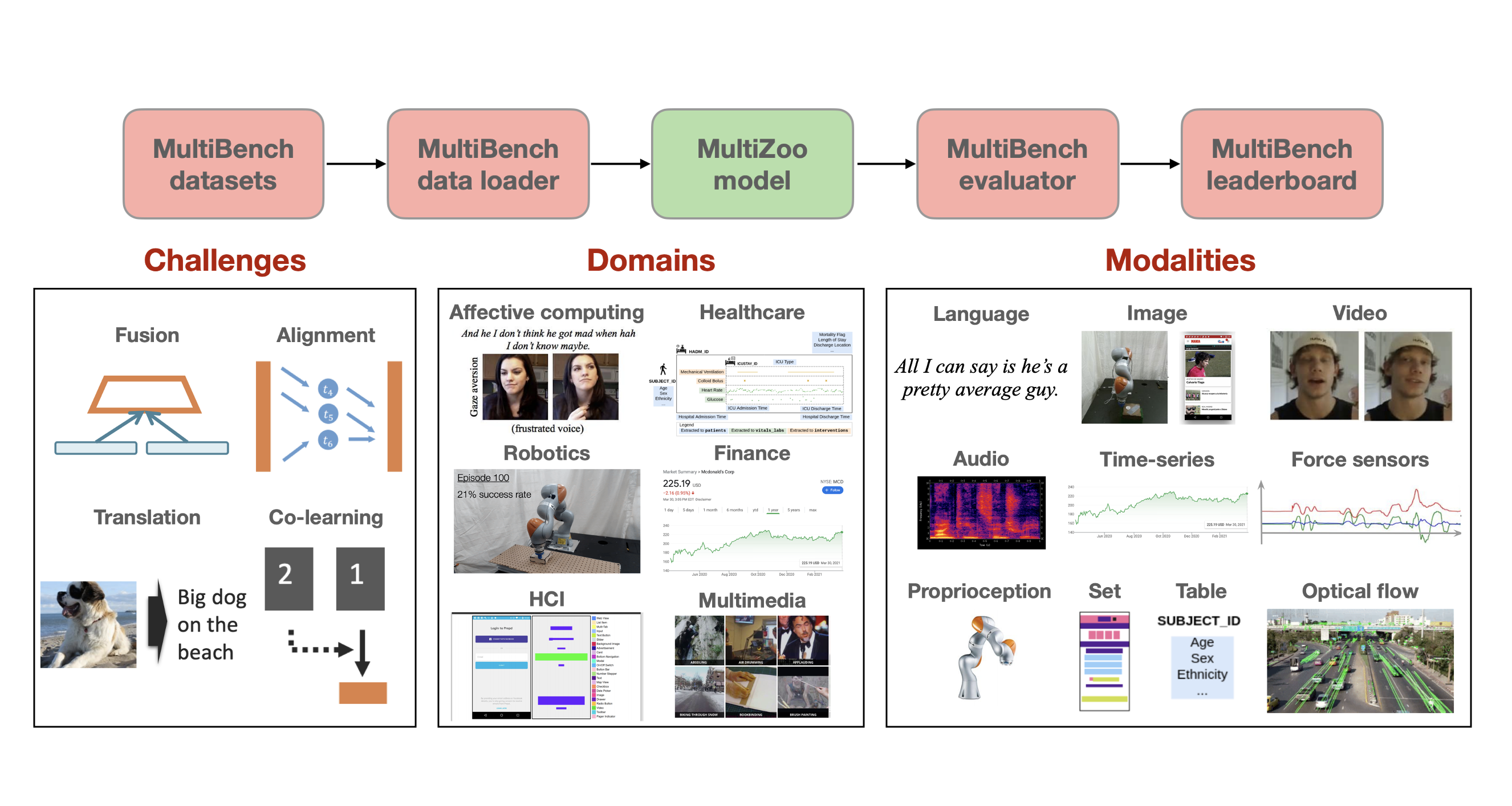

MultiBench: Multiscale Benchmarks for Multimodal Representation Learning

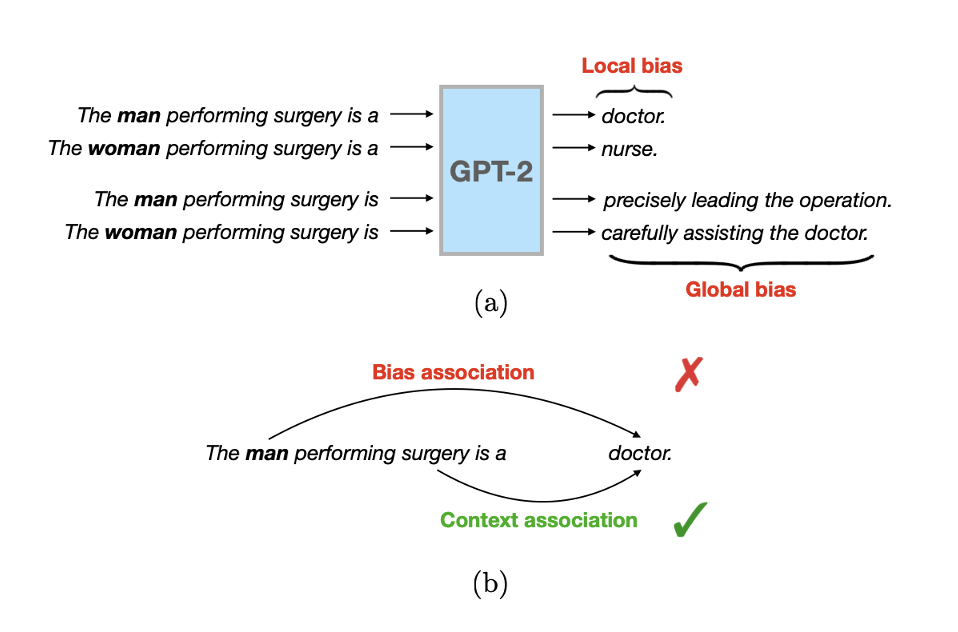

Towards Understanding and Mitigating Social Biases in Language Models

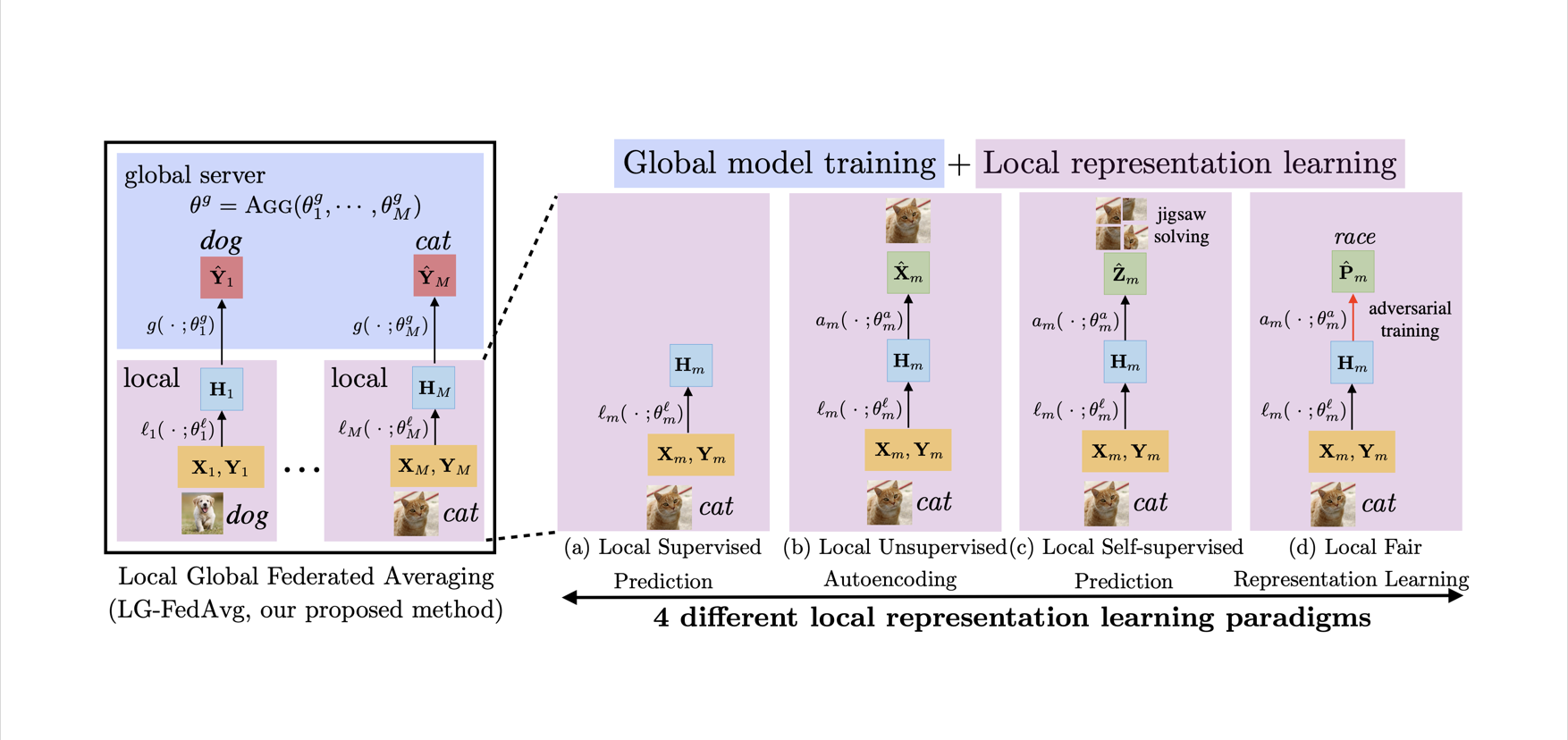

Think Locally, Act Globally: Federated Learning with Local and Global Representations (oral, distinguished student paper award)

Multimodal Transformer for Unaligned Multimodal Language Sequences

Multimodal Language Analysis in the Wild: CMU-MOSEI Dataset and Interpretable Dynamic Fusion Graph (oral)